Explainable Artificial Intelligence (XAI) is a specialized area within artificial intelligence that aims to make AI systems more transparent by clarifying how they reach their decisions. This article looks at its role in the banking sector.

Introduction: XAI in Banking

Imagine being denied a loan by an algorithm you can’t understand, and the bank can’t explain why. This scenario is becoming increasingly common as AI takes center stage in financial decisions.

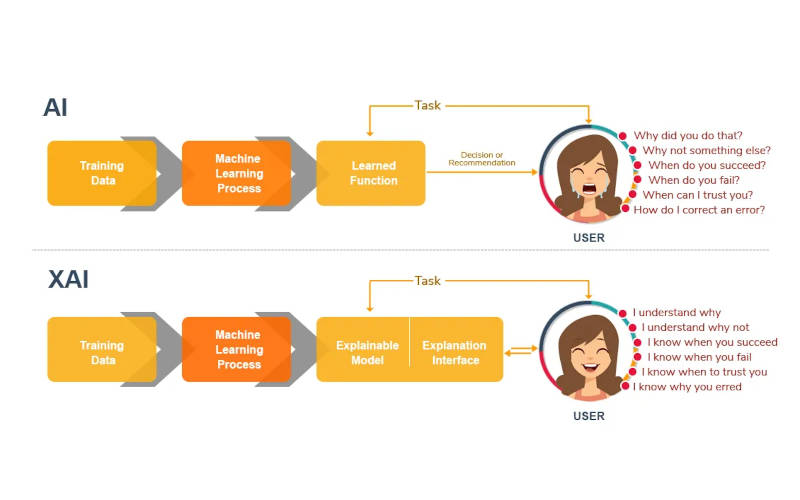

AI algorithms analyze vast transaction datasets in real time to identify fraudulent activity, potentially expand access to credit, and answer queries and resolve issues at speeds and volumes that are impossible for humans. While AI offers numerous benefits, its complexity can create ‘black box’ systems, where the decision-making process is opaque. This is where Explainable Artificial Intelligence (XAI) comes in. XAI aims to make AI decisions transparent and understandable to humans by developing techniques and methods that show why an AI model made a particular decision.

Therefore, the adoption of Explainable AI is not merely an option, but a critical necessity for the future of banking. By fostering transparency and understanding, XAI empowers banks to build customer trust, adhere to evolving regulatory mandates, and effectively mitigate the inherent risks associated with complex AI systems.

This article covers the growing applications of AI in banking, the challenges posed by its ‘black box’ nature, and the role of XAI in addressing them. It then examines the XAI techniques in use, real-world case studies, and the future of explainable AI in the financial industry.

The Rise of AI in Banking

A. Current AI Applications in Banking

- Fraud Detection and Prevention: AI can detect a sudden increase in international transactions from a normally local account, or a series of rapid, small-value purchases, indicating potential card skimming.

- Credit Scoring and Loan Approvals: AI can analyze a business owner’s cash flow patterns from their online sales platform to assess their creditworthiness, beyond traditional financial statements.

- Personalised Financial Advice: An AI system can analyze a customer’s spending habits and suggest a budget plan, or recommend investment portfolios based on their risk tolerance and financial goals.

- Customer Service Chatbots and Virtual Assistants: A chatbot can answer common questions about account fees, or guide a customer through the process of reporting a lost or stolen card.

- Algorithmic Trading: An AI algorithm can detect a sudden spike in trading volume for a particular stock and execute a buy order before the price increases further.

B. Benefits of AI in Banking

By automating tasks, AI helps banks increase efficiency, improve the customer experience, reduce operational costs, and strengthen risk management.

C. The ‘Black Box’ Problem

- Many advanced AI models, particularly deep learning networks, operate as ‘black boxes.’ This means that their decision-making process is opaque and difficult to understand, even for the developers who created them. These models involve complex mathematical calculations and numerous layers of interconnected nodes, making it challenging to trace the path from input to output.

- This opacity poses challenges for transparency and accountability. For example, if a loan application is denied by an AI system, the bank may be unable to explain the specific reasons for the denial, leaving the applicant frustrated and potentially leading to accusations of discrimination.

- Furthermore, in the event of a model error or bias, it is very difficult to debug and correct the model.

Importance of Explainable AI (XAI) in Banking

A. Building Trust and Transparency

In an era where financial decisions increasingly impact individuals’ lives, customers have a fundamental right to understand how those decisions are made. When a loan application is rejected or a transaction is flagged as suspicious, customers deserve clear and understandable explanations. This transparency builds trust and empowers customers to make informed decisions about their finances. Similarly, regulators need to understand the decision-making processes of AI systems to ensure compliance with laws and protect consumers from unfair or discriminatory practices. Without explainability, it becomes impossible to hold AI systems accountable.

XAI provides the tools and techniques to make AI decisions transparent and understandable. By providing clear and concise explanations, banks can demonstrate that their AI systems are fair, unbiased, and reliable. This builds trust with customers, who are more likely to accept and rely on AI-driven services when they understand how they work. Furthermore, when regulators can easily audit and understand AI systems, they gain confidence in the stability and fairness of the financial system.

B. Regulatory Compliance

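- GDPR’s provisions on automated decision-making, often described as a ‘right to explanation’, are a key driver for XAI adoption in Europe, while supervisory exercises such as the Federal Reserve’s Comprehensive Capital Analysis and Review (CCAR) push US banks to keep their models documented and auditable.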

- XAI techniques provide banks with the ability to generate explanations that can be used to demonstrate compliance with regulatory requirements.

- XAI is crucial for detecting and mitigating biases in AI models. By understanding the factors that influence AI decisions, banks can identify and address potential biases that may lead to discriminatory outcomes. For example, XAI can reveal whether a credit scoring model is unfairly penalizing individuals from certain demographic groups.
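A simple first check for the kind of bias described above is to compare approval rates across groups before digging into feature-level explanations. The sketch below is only an illustration: the group labels, the decision data, and the function names are all hypothetical, and a gap in outcomes is a prompt for further investigation rather than proof of discrimination.

```python
from collections import defaultdict

# Hypothetical model decisions: (demographic group, loan approved?)
DECISIONS = (
    [("A", True)] * 8 + [("A", False)] * 2 +
    [("B", True)] * 5 + [("B", False)] * 5
)

def approval_rates(decisions):
    """Return the share of approved applications for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, is_approved in decisions:
        totals[group] += 1
        if is_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}

def approval_rate_ratio(rates):
    """Ratio of the lowest to the highest group approval rate (1.0 means parity)."""
    return min(rates.values()) / max(rates.values())

rates = approval_rates(DECISIONS)
print(rates)
print(round(approval_rate_ratio(rates), 3))
```

On this made-up data, group A is approved 80% of the time and group B 50% of the time. A gap like this would justify a closer look at which features drive the difference.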

C. Risk Management

- AI models, especially complex ones, can be susceptible to errors, biases, and unexpected behaviors. XAI helps banks identify and mitigate these risks by providing insights into how the models are making decisions. By understanding the factors that influence AI decisions, banks can identify potential weaknesses and vulnerabilities in their models and take steps to address them. This proactive approach to risk management is essential for ensuring the stability and reliability of AI-driven systems.

- XAI enables banks to understand the specific factors driving AI decisions, which is crucial for assessing the validity and reliability of their models. For example, XAI can reveal whether a fraud detection model is relying on irrelevant or misleading factors, so the bank can confirm that the model’s judgments are sound.

- XAI makes it possible to trace errors back to their source, enabling faster and more effective model improvements.

D. Ethical Considerations

- AI systems in banking must be designed and deployed in a way that ensures fairness and avoids discriminatory outcomes. By ensuring fairness and avoiding discrimination, banks can build trust and maintain ethical standards.

- XAI helps banks establish accountability by providing clear and understandable explanations for AI decisions. This enables banks to demonstrate that their AI systems are operating within ethical and legal boundaries and to take responsibility for any adverse outcomes.

- When AI makes a mistake, XAI provides the evidence needed to understand why, and who is responsible for correcting it.

XAI Techniques and Methodologies

A. Common XAI Techniques

- LIME (Local Interpretable Model-agnostic Explanations): LIME explains the predictions of any machine learning classifier by approximating it locally with an interpretable model (e.g., a linear model). It perturbs the input data, observes how the prediction changes, and then fits a simple, interpretable model to those changes. This provides a local explanation of why a particular prediction was made for a specific instance.

- SHAP (SHapley Additive exPlanations): SHAP uses game theory to explain the output of any machine learning model. It calculates the contribution of each feature to the prediction, based on Shapley values. These values represent the average marginal contribution of a feature across all possible combinations of features. SHAP provides local explanations for individual predictions, which can also be aggregated into a global view of feature importance.

- Rule-based Explanations: Rule-based explanations extract human-understandable rules from complex AI models. These rules can be expressed in ‘if-then’ statements, making it easy to understand the logic behind the model’s decisions. For example, ‘If income is above X and credit score is above Y, then loan is approved.’

- Decision Trees: Decision trees are inherently interpretable models that represent decision-making processes as a series of branching decisions. They visualize the flow of information and the conditions that lead to specific outcomes. In banking, they can be used to explain credit scoring decisions or fraud detection rules.

- Feature Importance Analysis: Feature importance analysis ranks the input features based on their contribution to the model’s predictions. This helps identify the most influential factors driving the model’s decisions. In banking, it can reveal which factors are most important in determining loan approvals or fraud risks.

- Saliency Maps: Saliency maps are used to visualize the parts of an input that are most relevant to the model’s prediction. They are often used in image recognition tasks, but can also be adapted to other domains. In banking, they could potentially highlight the specific data points that triggered a fraud alert.
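To make the Shapley value idea behind SHAP concrete, the sketch below computes exact Shapley values by brute force for a three-feature toy model. Everything in it is an assumption for illustration: the `score` function, the baseline applicant, and the feature values are invented, and real SHAP tooling uses faster approximations rather than full enumeration.

```python
from itertools import combinations
from math import factorial

# Hypothetical reference applicant and the applicant being explained.
BASELINE = {"income": 40_000, "credit_score": 600, "late_payments": 2}
APPLICANT = {"income": 65_000, "credit_score": 720, "late_payments": 0}

def score(x):
    """Toy credit model (an assumption, not a real scorecard)."""
    s = 0.00001 * x["income"] + 0.005 * x["credit_score"] - 0.3 * x["late_payments"]
    # Interaction term, so the model is not purely additive.
    if x["income"] > 50_000 and x["credit_score"] > 700:
        s += 0.5
    return s

def value(coalition):
    """Model output when only the features in `coalition` take the applicant's values."""
    x = {f: (APPLICANT[f] if f in coalition else BASELINE[f]) for f in BASELINE}
    return score(x)

def shapley_values():
    """Average each feature's marginal contribution over all feature subsets."""
    features = list(BASELINE)
    n = len(features)
    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for k in range(n):
            for subset in combinations(others, k):
                weight = factorial(k) * factorial(n - k - 1) / factorial(n)
                total += weight * (value(set(subset) | {f}) - value(set(subset)))
        phi[f] = total
    return phi

phi = shapley_values()
for name, contribution in phi.items():
    print(f"{name}: {contribution:+.3f}")
```

The contributions add up to the gap between the applicant’s score and the baseline score, which is the property that makes Shapley values attractive for attributing a decision to individual inputs. Full enumeration grows exponentially with the number of features, so it is only practical for very small models like this one.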

B. How These Techniques Are Applied in Banking Contexts

- LIME for Loan Approvals: LIME can show that, for a hypothetical applicant John Doe, a low credit score and recent late payments were the main reasons for the loan denial.

- SHAP for Fraud Detection: SHAP can show that, for a specific flagged transaction, location and transaction time were the largest contributing factors.

- Visualisation for Model Behaviour: Visualising feature importance helps stakeholders see at a glance which data inputs matter most to the AI’s output.
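The loan example can be sketched with a LIME-style local surrogate: perturb one applicant’s data, query the model, and fit a proximity-weighted linear model to the results. This is a simplified stand-in written with the standard library only, not the `lime` package itself. The `black_box` model, the feature scales, and the applicant are all hypothetical.

```python
import math
import random

def black_box(credit_score, late_payments):
    """Stand-in for an opaque model: returns an approval probability (hypothetical)."""
    z = 0.02 * (credit_score - 650) - 0.8 * late_payments
    return 1.0 / (1.0 + math.exp(-z))

def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def explain_locally(instance, scales, n_samples=500, kernel_width=1.0, seed=0):
    """Fit a weighted linear surrogate around `instance`; return one coefficient per feature."""
    rng = random.Random(seed)
    names = list(instance)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        # Perturb each feature in standardized units, then map back to raw units.
        z = [rng.gauss(0.0, 1.0) for _ in names]
        perturbed = {f: instance[f] + zi * scales[f] for f, zi in zip(names, z)}
        rows.append([1.0] + z)  # intercept plus standardized features
        targets.append(black_box(**perturbed))
        dist2 = sum(zi * zi for zi in z)
        weights.append(math.exp(-dist2 / kernel_width ** 2))  # nearer samples count more
    k = len(names) + 1
    # Normal equations for weighted least squares: (X^T W X) beta = X^T W y
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(k)]
            for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, weights, targets)) for i in range(k)]
    beta = solve(xtwx, xtwy)
    return dict(zip(names, beta[1:]))

applicant = {"credit_score": 580, "late_payments": 3}
scales = {"credit_score": 30.0, "late_payments": 1.0}  # assumed typical variation per feature
coefs = explain_locally(applicant, scales)
for name, coef in sorted(coefs.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name}: {coef:+.4f}")
```

A positive coefficient on `credit_score` means a higher score would raise this applicant’s approval probability, and a negative coefficient on `late_payments` means fewer late payments would do the same. Those two signs are what a loan officer would translate into the reasons for denial.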

C. Challenges in Implementing XAI

- Balancing Accuracy and Interpretability: Finding the sweet spot between a highly accurate ‘black box’ and a less accurate, but transparent, model is a major challenge.

- Scaling XAI for Complex Models: Explaining a model with millions of parameters requires significant computational resources and efficient algorithms.

- User Interface Design: It is not enough to generate explanations; they must be presented in a way that is accessible and meaningful to the end-user.

Case Studies and Real-World Applications

A. Banks Implementing XAI Successfully

- One major international bank implemented SHAP values to enhance its fraud detection system. By understanding the key features that contributed to fraud alerts, the bank was able to refine its algorithms and reduce false positives by a significant margin. This resulted in a more efficient fraud detection process and improved customer satisfaction.

- A regional bank used LIME to explain loan denial decisions to customers. This allowed the bank to provide clear and actionable feedback, helping customers understand how to improve their creditworthiness. This transparency fostered trust and reduced customer frustration.

- A digital-first bank used decision trees to create transparent credit scoring models. This allowed them to offer personalized financial advice, explain credit decisions, and increase loan approval rates among underserved populations by demystifying the process.

- The international bank using SHAP saw a 20% reduction in false positive fraud alerts, leading to savings of $X million annually. The regional bank using LIME reported a 15% increase in customer satisfaction scores related to loan application feedback. The digital bank reported a 10% increase in loan approvals from previously underserved communities.

B. Best Practices for XAI Implementation

Banks should adopt a phased approach to XAI implementation, starting with pilot projects in specific areas, such as fraud detection or loan approvals. It is essential to involve stakeholders from different departments, including risk management, compliance, and customer service, in the process. Integrating XAI into existing data pipelines and model development frameworks is also crucial for ensuring seamless implementation. Prioritize XAI tools that can be easily integrated with current systems.

Explanations should be tailored to the audience. Customers may require simpler explanations than regulators or auditors. Visual aids, such as charts and graphs, can be helpful for conveying complex information. Use clear and concise language, avoiding technical jargon whenever possible. Provide actionable insights that empower customers to make informed decisions. Train staff to effectively communicate XAI-generated explanations to customers.
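One way to tailor the same explanation to different audiences is to render a single set of feature contributions in two forms. The sketch below assumes the contributions have already been produced by some XAI method. The feature names, the wording in `REASON_TEXT`, and both function names are hypothetical.

```python
# Hypothetical mapping from model feature names to plain-language reasons.
REASON_TEXT = {
    "credit_score": "Your credit score is below the level we usually approve.",
    "late_payments": "Recent late payments were recorded on your accounts.",
    "income": "Your stated income is low relative to the requested amount.",
}

def customer_reasons(contributions, top_n=2):
    """Plain-language reasons for the features that pushed the decision down the most."""
    negative = [(f, c) for f, c in contributions.items() if c < 0]
    negative.sort(key=lambda item: item[1])  # most negative first
    return [REASON_TEXT.get(f, f) for f, _ in negative[:top_n]]

def auditor_report(contributions):
    """Every feature with its signed contribution, largest magnitude first."""
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return [f"{f}: {c:+.3f}" for f, c in ranked]

# Example contributions (made-up numbers for one declined application).
contributions = {"credit_score": -0.42, "late_payments": -0.31, "income": 0.05}
print(customer_reasons(contributions))
print(auditor_report(contributions))
```

The customer sees only the top reasons in everyday language, while an auditor or regulator gets the full signed breakdown.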

C. Future Trends in XAI

- Advancements in XAI Techniques: Researchers are actively developing new XAI techniques that can handle more complex AI models and provide more nuanced explanations. This includes advancements in causal inference, counterfactual explanations, and explainable reinforcement learning. The development of automated XAI tools and platforms will also make it easier for banks to implement and scale XAI solutions. The integration of knowledge graphs and natural language generation will allow for more human-like explanations.

- Stronger AI Governance: As AI becomes more prevalent in banking, ethical considerations and governance frameworks will become increasingly important. Banks need to establish clear guidelines and policies for the responsible use of AI, including XAI. This includes addressing issues such as bias, fairness, and accountability. Regulatory bodies will also play a crucial role in shaping the ethical landscape of AI in banking.

- Evolving Regulations: Regulatory bodies are increasingly focusing on the transparency and explainability of AI systems. We can expect to see more specific regulations and guidelines related to XAI in the coming years. Regulators will likely require banks to provide evidence of their XAI practices and demonstrate compliance with relevant standards.

Conclusion

- Explainable Artificial Intelligence is not a passing trend. It is the bedrock upon which the future of trustworthy AI-driven banking will be built.

- While XAI offers immense benefits, it also presents technical and practical challenges that must be addressed to ensure its successful implementation.

- Explainable Artificial Intelligence is not just about explaining AI decisions; it’s about building a financial future where technology and trust go hand in hand.

- Don’t wait for regulations to force your hand. Proactively embrace XAI and become a leader in responsible AI banking.