Scorecard Model in Banking: Enhancing Risk Management and Decision Making

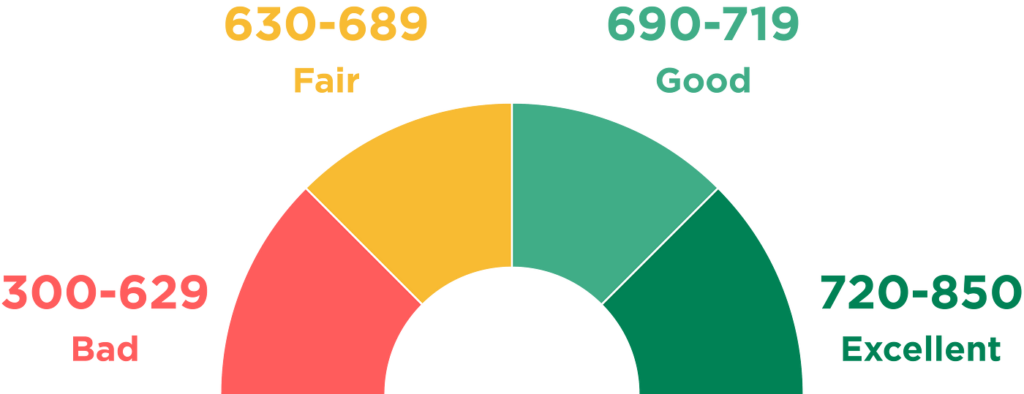

A scorecard model is a statistical tool used to assess credit risk, most often in loan applications. It analyzes borrower data such as income and credit history and assigns points to each factor. The points are totaled into a score that predicts the likelihood of loan repayment. Scorecards help lenders make objective decisions and streamline the approval process. […]
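To make the points idea concrete, here is a minimal sketch of an additive scorecard. The factor names, band edges, and point values are hypothetical and chosen only for illustration. A real scorecard would derive them from a model fitted to historical repayment data.

```python
from bisect import bisect_right

# Hypothetical scorecard: each factor has ascending band edges and one
# point value per band (len(points) == len(edges) + 1).
# All numbers are illustrative assumptions, not taken from any real lender.
SCORECARD = {
    "annual_income": {"edges": [20_000, 50_000, 100_000], "points": [10, 25, 40, 55]},
    "years_of_credit_history": {"edges": [2, 5, 10], "points": [5, 20, 35, 45]},
    "missed_payments_last_2y": {"edges": [1, 3], "points": [50, 20, 0]},
}


def factor_points(factor, value):
    """Return the points for one factor by locating the band the value falls in."""
    band = SCORECARD[factor]
    # bisect_right counts how many edges are <= value, which is the band index.
    index = bisect_right(band["edges"], value)
    return band["points"][index]


def total_score(applicant):
    """Sum the points across every factor on the scorecard."""
    return sum(factor_points(factor, applicant[factor]) for factor in SCORECARD)


applicant = {
    "annual_income": 62_000,
    "years_of_credit_history": 7,
    "missed_payments_last_2y": 0,
}
print(total_score(applicant))  # 40 + 35 + 50 = 125
```

In this sketch a higher total means lower assessed risk. A lender would typically compare the total against a cutoff of its own choosing, which is not shown here.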
