A neural network, or artificial neural network (ANN), is one of the most frequently used buzzwords in analytics these days. An ANN is a machine learning technique that enables a computer to learn from observational data. In computing, neural networks are inspired by the way biological nervous systems process information.

Biological neural networks consist of interconnected neurons with dendrites that receive inputs. Based on these inputs, each neuron produces an output that travels along its axon to another neuron.

The term “neural network” derives from the work of Warren S. McCulloch, a neuroscientist, and Walter Pitts, a logician, who developed the first conceptual model of an artificial neural network. In their work, they describe the concept of a neuron: a single cell, living in a network of cells, that receives inputs, processes those inputs, and generates an output.

There are several kinds of artificial neural networks. These types of networks differ in the mathematical operations and the set of parameters they use to determine the output.

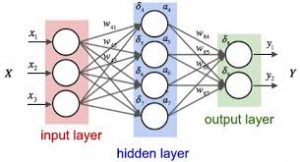

In the computing world, neural networks are organized in layers made up of interconnected nodes, each of which contains an activation function. Input patterns are presented to the network through the input layer, which passes them on to one or more hidden layers. The hidden layers perform the processing and pass the result to the output layer.
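To make the flow from input layer to hidden layer to output layer concrete, here is a minimal sketch in plain Python. The layer sizes, the weight values, and the choice of a sigmoid activation are all assumptions made for illustration; they do not come from any particular network.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights):
    """Compute one layer: each node takes a weighted sum of all inputs,
    then applies the activation function."""
    return [sigmoid(sum(w * x for w, x in zip(node_weights, inputs)))
            for node_weights in weights]

# Hypothetical weights: 2 inputs -> 3 hidden nodes -> 1 output node.
hidden_weights = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
output_weights = [[0.7, -0.5, 0.2]]

pattern = [1.0, 0.0]                              # presented at the input layer
hidden = layer_forward(pattern, hidden_weights)   # hidden layer processing
output = layer_forward(hidden, output_weights)    # output layer result
print(output)
```

Each row of a weight list belongs to one node, so adding a node means adding a row, and adding a hidden layer means adding one more `layer_forward` call.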

Understanding Neural Network Process

The human brain has approximately 100 billion neurons, and its nerve impulses can travel at speeds as fast as 268 mph! In essence, a neural network is a collection of neurons connected by synapses. This collection is organized into three main layers: the input layer, the hidden layer, and the output layer. A network can have many hidden layers, which is where the term deep learning comes into play. An artificial neural network takes several inputs, called features, and in the simplest case produces a single output, the predicted label.

The circles represent neurons, while the lines represent synapses. A synapse multiplies each input by its weight. You can think of a weight as the “strength” of the connection between neurons. Weights largely determine the output of a neural network, but they are not fixed: they can be adjusted. Once the weighted inputs are summed, an activation function is applied to return an output.
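The effect of connection strength can be seen in a small sketch of a single neuron. The function name `neuron_output`, the feature values, the weights, and the sigmoid activation are hypothetical choices for illustration only.

```python
import math

def neuron_output(inputs, weights):
    # Each synapse multiplies one input by its weight; the neuron sums the results.
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    # Sigmoid activation (an assumed choice) maps the sum into (0, 1).
    return 1.0 / (1.0 + math.exp(-weighted_sum))

features = [2.0, 3.0]
weak = neuron_output(features, [0.1, 0.1])    # weighted sum is about 0.5
strong = neuron_output(features, [0.9, 0.9])  # weighted sum is about 4.5
print(weak, strong)
```

With the same features, the stronger weights push the output closer to 1, which is the sense in which weights set how much each input counts.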

At their core, neural networks are simple: they compute a dot product of the inputs and weights and then apply an activation function. When the weights are adjusted using the gradient of the loss function, the network adapts and produces more accurate outputs.
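As a rough illustration of adjusting weights with the gradient of a loss function, the sketch below trains a single neuron on one example. The squared-error loss, the sigmoid activation, the learning rate, and the training example are all assumptions for the sake of the example; real networks usually train on many examples and many layers.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(inputs, weights):
    # Dot product of inputs and weights, followed by the activation function.
    return sigmoid(sum(x * w for x, w in zip(inputs, weights)))

def train_step(inputs, target, weights, learning_rate=0.5):
    """Return the weights after one gradient descent step on squared error."""
    y = predict(inputs, weights)
    # Chain rule for loss = (y - target)^2 with a sigmoid activation:
    # dloss/dw_i = 2 * (y - target) * y * (1 - y) * x_i
    delta = 2.0 * (y - target) * y * (1.0 - y)
    return [w - learning_rate * delta * x for w, x in zip(weights, inputs)]

inputs, target = [1.0, 0.5], 1.0   # one hypothetical training example
weights = [0.0, 0.0]
loss_before = (predict(inputs, weights) - target) ** 2
for _ in range(100):
    weights = train_step(inputs, target, weights)
loss_after = (predict(inputs, weights) - target) ** 2
print(loss_before, loss_after)
```

Each step moves every weight a small distance against its gradient, so the loss after training is lower than the loss before it.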