Big Data Analytics for Risk Management in the Banking Sector

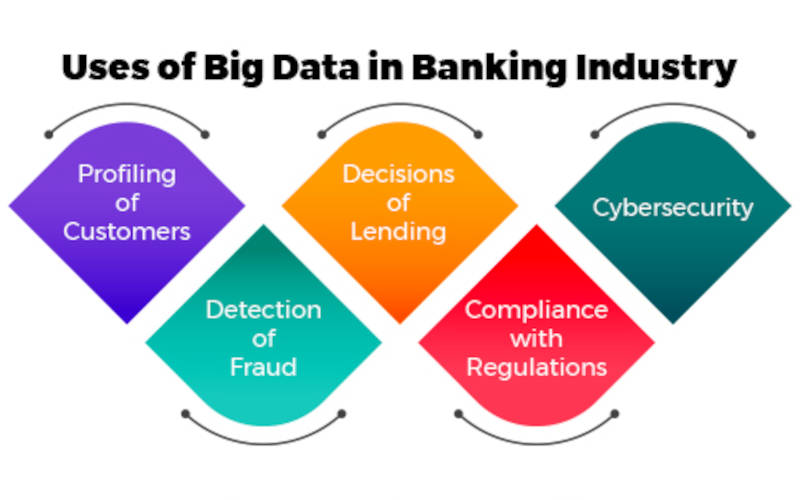

Banks face diverse challenges when they try to manage risk with simple spreadsheets and gut feelings. That is why banks are choosing Big Data Analytics for risk management.